Yunsung Kim

Google Scholar / Email / GitHub / Twitter / LinkedIn

I am a PhD candidate in the Computer Science Department at Stanford University, where I am advised by Prof. Chris Piech. My research aims to build computational tools that help instructors understand and aid their students more efficiently and effectively. I often use ideas from probabilistic modeling, machine learning, and artificial intelligence.

I was an Applied Scientist Intern (Summer 2022) in the Learning Sciences Organization at Amazon, where I was supervised by Prof. Candace Thille. Before coming to Stanford, I completed my undergraduate studies at Columbia University, where I was fortunate to work with Prof. Augustin Chaintreau and Prof. Martha Kim.


Teaching

One of my greatest sources of inspiration for my research is my love for teaching! Here are the courses I've been involved with:

- (Summer 2023) CS109: Probability for Computer Scientists (Course Instructor)

- (Spring 2023) EDUC 259B: Education Data Science Seminar (Guest Lecture, 04/14/2023)

- (Spring 2022) CS109: Probability for Computer Scientists (Teaching Assistant)

- (Fall 2021) CS109: Probability for Computer Scientists (Teaching Assistant)

- (Summer 2021) CS109: Probability for Computer Scientists (Teaching Assistant)

- (Spring 2020) CS106A: Programming Methodology (Teaching Assistant)

Recent Research


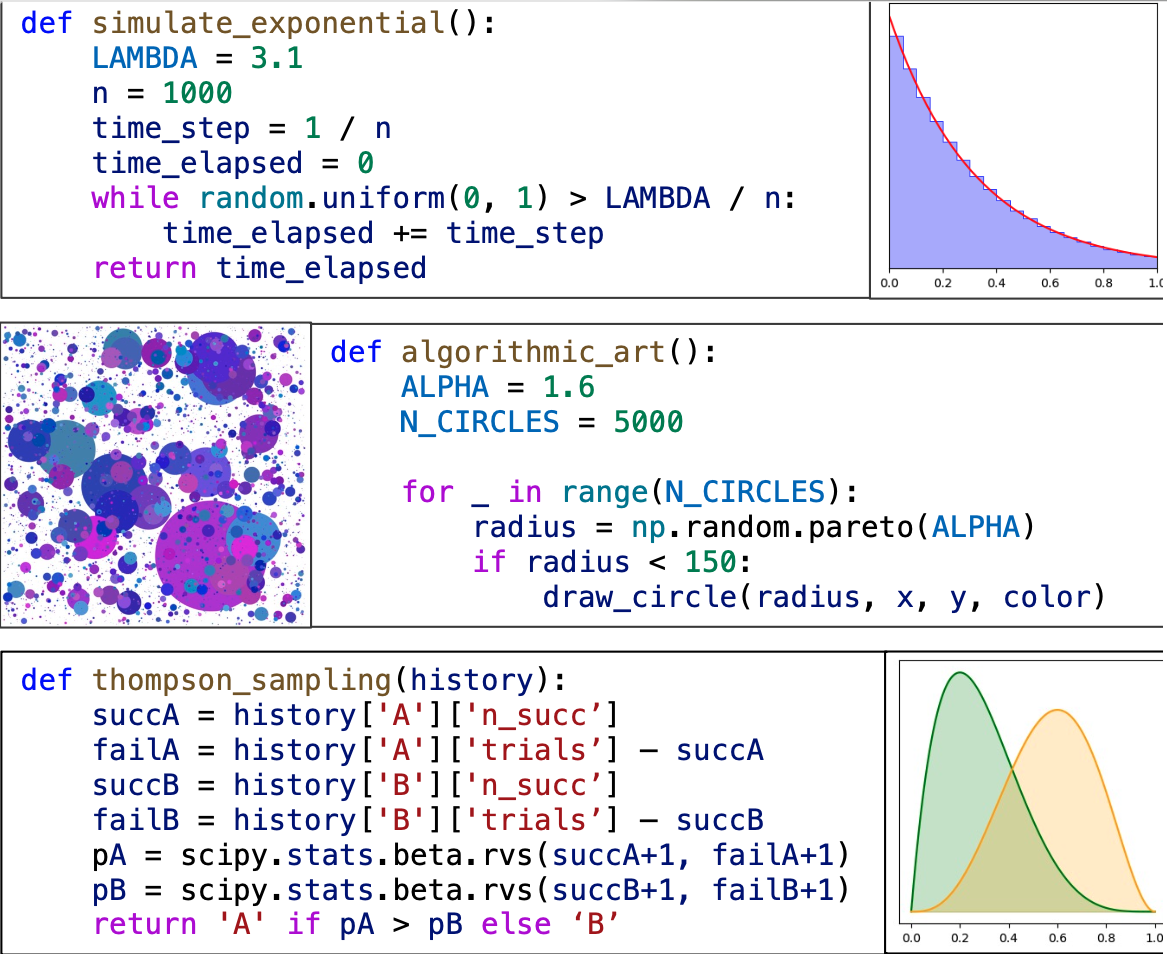

Grading and Clustering Student Programs That Produce Probabilistic Output

Yunsung Kim*, Jadon Geathers* (Equal Contribution), Chris Piech

EDM '24: Proceedings of the 17th International Conference on Educational Data Mining, 2024

We develop StochasticGrade, an open-source assessment framework for programs that produce probabilistic output. Based on hypothesis testing, StochasticGrade offers an exponential speedup over standard two-sample hypothesis tests in identifying incorrect programs. It lets users set the desired misgrade rate and choose a suitable “disparity function,” which measures the dissimilarity between two samples throughout the grading process. Moreover, the features computed by StochasticGrade can be used to cluster student programs by error type quickly and accurately.
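To make the idea of a “disparity function” concrete, the sketch below computes one common choice, the two-sample Kolmogorov-Smirnov statistic (the largest gap between two empirical CDFs), between samples drawn from a student program and from a reference solution. This is a minimal illustration under stated assumptions: `ks_disparity` is a hypothetical helper, not StochasticGrade's API, and StochasticGrade's actual sequential test and misgrade-rate calibration are not reproduced here.

```python
import random
from bisect import bisect_right
from typing import Sequence


def ks_disparity(sample_a: Sequence[float], sample_b: Sequence[float]) -> float:
    """Hypothetical disparity function: the two-sample Kolmogorov-Smirnov
    statistic, i.e. the largest absolute gap between the two empirical CDFs
    (0.0 for identical samples, 1.0 for completely separated ones)."""
    if not sample_a or not sample_b:
        raise ValueError("both samples must be non-empty")
    a, b = sorted(sample_a), sorted(sample_b)
    n, m = len(a), len(b)
    gap = 0.0
    # Both empirical CDFs only change at observed points, so checking every
    # pooled value finds the maximum gap.
    for x in a + b:
        cdf_a = bisect_right(a, x) / n
        cdf_b = bisect_right(b, x) / m
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


if __name__ == "__main__":
    rng = random.Random(0)
    reference = [rng.gauss(0, 1) for _ in range(500)]    # reference solution
    student_ok = [rng.gauss(0, 1) for _ in range(500)]   # same distribution
    student_bug = [rng.gauss(0.5, 1) for _ in range(500)]  # shifted mean (a bug)
    print(ks_disparity(reference, student_ok))   # expected to be small
    print(ks_disparity(reference, student_bug))  # expected to be noticeably larger
```

A grader built on such a function would flag a program when its disparity to the reference exceeds a threshold chosen to control the misgrade rate; how that threshold is set is where the real framework does its work.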


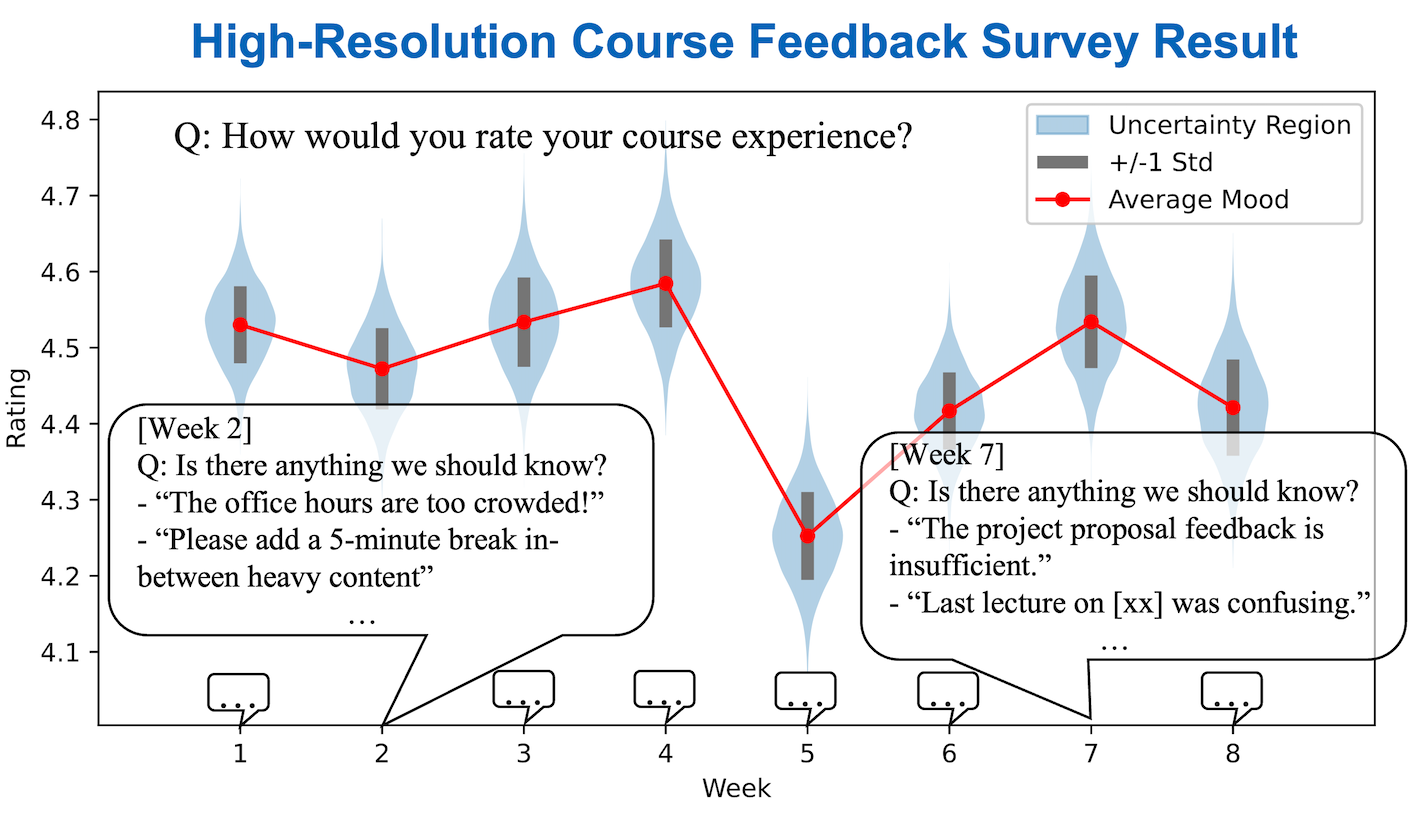

High-Resolution Course Feedback: Timely Feedback Mechanism for Instructors

Yunsung Kim, Chris Piech

L@S '23: Proceedings of the Tenth ACM Conference on Learning @ Scale, 2023

paper / code / website

High-Resolution Course Feedback (HRCF) is a tool for minimizing the delay between when an issue comes up in a course and when instructors receive feedback about it. Each week it requests feedback from a small random subset of students, while surveying each student exactly twice per term. We demonstrate that, without requiring more effort than mid- or end-of-term surveys, HRCF can provide constructive and actionable feedback early in the term.
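The sampling constraint described above (a small random subset each week, exactly two surveys per student per term) can be met with a shuffled round-robin assignment. The following is a hypothetical sketch, not HRCF's actual scheduler: `hrcf_schedule` is an illustrative name, and placing each student's second survey roughly half a term after the first is an assumption made here for even spacing.

```python
import random


def hrcf_schedule(students, num_weeks, seed=None):
    """Hypothetical scheduler: assign each student to exactly two distinct
    survey weeks so that weekly survey loads are nearly equal.

    Returns a dict mapping week index (0..num_weeks-1) to the list of
    students surveyed that week.
    """
    if num_weeks < 2:
        raise ValueError("need at least two weeks to survey each student twice")
    rng = random.Random(seed)
    order = list(students)
    rng.shuffle(order)  # randomizes which students land in which week
    offset = num_weeks // 2  # assumed gap between a student's two surveys (>= 1)
    schedule = {week: [] for week in range(num_weeks)}
    for i, student in enumerate(order):
        first = i % num_weeks  # round-robin keeps weekly loads balanced
        second = (first + offset) % num_weeks  # always a different week
        schedule[first].append(student)
        schedule[second].append(student)
    return schedule


if __name__ == "__main__":
    roster = [f"student{k}" for k in range(100)]
    schedule = hrcf_schedule(roster, num_weeks=10, seed=42)
    for week, surveyed in schedule.items():
        print(week, len(surveyed))
```

With 100 students and a 10-week term, every week surveys exactly 20 students, and each student appears in two different weeks; the shuffle is what makes each weekly subset random.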


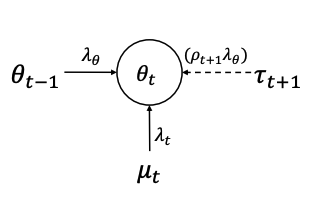

Variational Temporal IRT: Fast, Accurate, and Explainable Inference of Dynamic Learner Proficiency

Yunsung Kim, Sree Sankaranarayanan, Chris Piech, Candace Thille

EDM '23: Proceedings of the 16th International Conference on Educational Data Mining, 2023

paper / code

Variational Temporal IRT (VTIRT) is a new algorithm for inferring dynamic learner proficiency when learning occurs alongside assessment. While retaining high inference quality, VTIRT is 28 times faster than the fastest existing inference algorithm, and its modular design makes its inference interpretable.


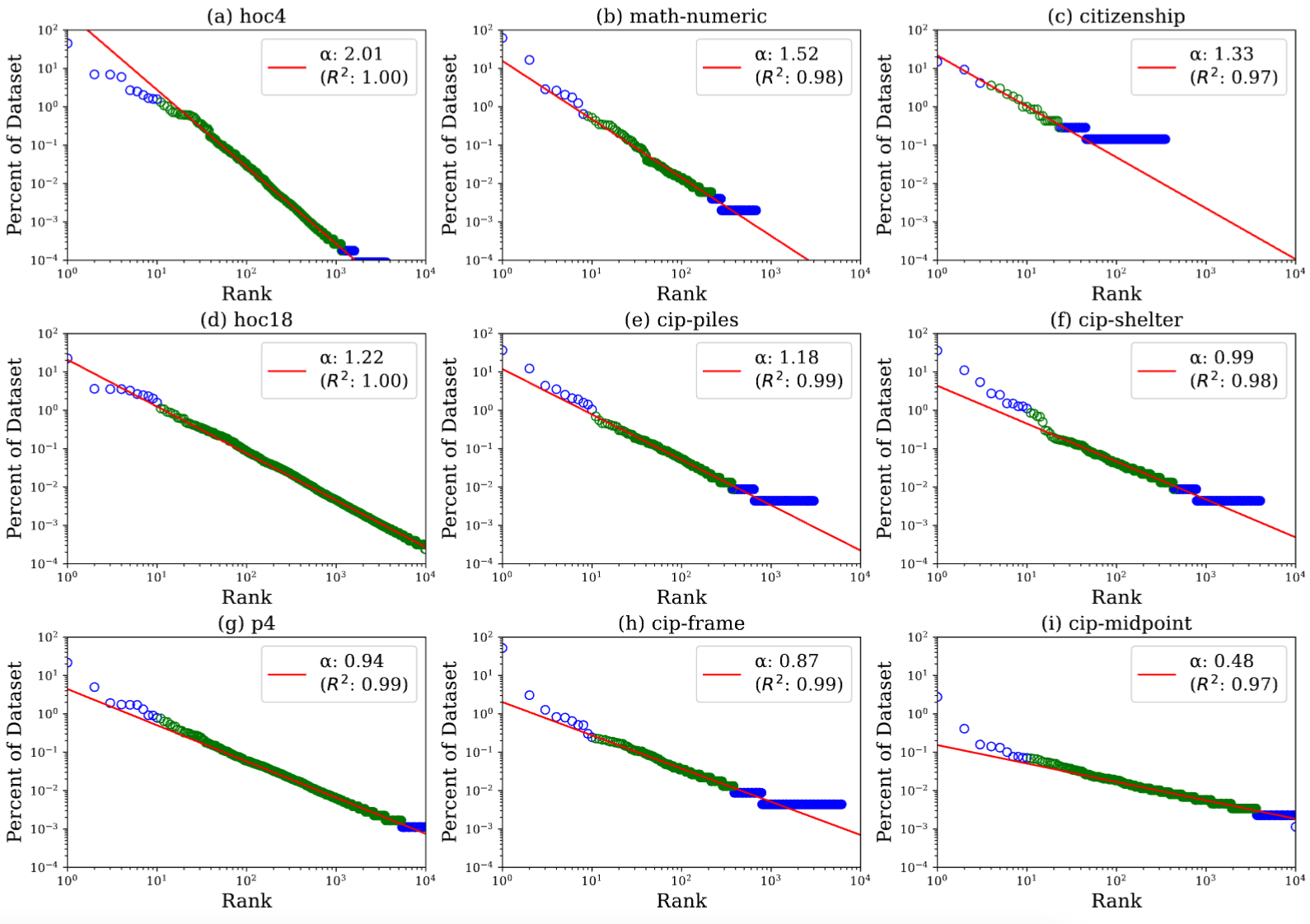

The Student Zipf Theory: Inferring Latent Structures in Open-Ended Student Work To Help Educators

Yunsung Kim, Chris Piech

LAK '23: 13th International Learning Analytics and Knowledge Conference, 2023

paper (NB: Some figures are not properly displayed in Chrome) / code

Are there structures underlying student work that are universal across all open-ended tasks? We demonstrate that, across many subjects and assignment types, the probability distribution underlying student-generated open-ended work is close to Zipf’s Law. We discuss how inferring this latent structure can help classrooms, and we develop an algorithm for inferring it.
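For reference, Zipf's law with exponent s says that, among N distinct items, the k-th most common one has probability p(k) = k^(-s) / Σ_{j=1}^{N} j^(-s). The sketch below computes that distribution alongside the empirical rank-frequency curve of a list of submissions, which is the kind of comparison the claim above is about. The helper names are illustrative assumptions, and this does not reproduce the paper's inference algorithm.

```python
from collections import Counter


def zipf_pmf(num_items: int, s: float = 1.0) -> list[float]:
    """Zipf's law over ranks k = 1..num_items: p(k) = k**(-s) / sum_j j**(-s)."""
    weights = [k ** (-s) for k in range(1, num_items + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def rank_frequencies(submissions) -> list[float]:
    """Empirical frequency of each distinct submission, sorted from most to
    least common (the rank-frequency curve)."""
    counts = Counter(submissions)
    n = sum(counts.values())
    return [c / n for _, c in counts.most_common()]


if __name__ == "__main__":
    # Toy data: 11 submissions containing 3 distinct answers.
    subs = ["a"] * 6 + ["b"] * 3 + ["c"] * 2
    print(rank_frequencies(subs))
    print(zipf_pmf(3, s=1.0))
```

In this toy example both lists come out to approximately [0.545, 0.273, 0.182], an exact fit with s = 1; real student work would only be expected to be close to Zipfian.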


Adapted from Leonid Keselman's fork of John Barron's website.